Copyright © 2007,2008 Maxime Morge and members of the MARGO Project


The ARGUGRID project has two generic aims. We want to provide a representation of the agents' overall internal state, and we want to provide computational frameworks for automating the agents' internal reasoning. This representation is meant to be suitable for deploying the agents to support grid-based applications, in particular service selection and composition in these applications, and for engaging the agents in virtual organisations. The computational frameworks for agent reasoning need to support the argumentative features required to deal with the multitude of choices available to the agents (within the given applications) and with the negotiation and conflict resolution posed by virtual organisations. They also need to be computationally viable.

In this document, our objective is to present the computational counterpart of the argumentative process that we propose in ARGUGRID. We propose a Prolog prototype implementation encompassing all features of the dialectical argumentation model and the representation of the agents' overall internal state. Being a logic-based programming language, Prolog allows for easy prototyping and testing. Moreover, the argumentative agents will need to be plugged into GOLEM, an extension of PROSOCS, which already includes APIs for Prolog.

Developed for the ARGUGRID project, MARGO implements an argumentation-based mechanism for decision making detailed in (Morge ArgMAS 2008). A logic language is used as a concrete data structure for holding statements representing the knowledge, goals, and decisions of an agent. Different qualitative and quantitative priorities are attached to these items, corresponding to the probability of the knowledge, the preferences between goals, and the expected utilities of decisions. These concrete data structures hold the information that provides the backbone of arguments. Due to the abductive nature of decision making, we build arguments by reasoning backwards, possibly by making suppositions. Moreover, arguments are defined as tree-like structures. In this way, MARGO evaluates the possible decisions, suggests some solutions, and provides an interactive and intelligible explanation of the choice made.
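The following stand-alone Prolog sketch illustrates the idea of building tree-like arguments by reasoning backwards. It is not MARGO's implementation. The toy knowledge base (rule/3, candidate_decision/1, supposable/1) and the predicate argue/3 are hypothetical names chosen for this illustration only.

The sketch starts from a conclusion. It either takes the conclusion as a decision, supposes it, or picks a rule concluding it and argues recursively for each literal of the rule's body. Along the way it collects the decisions and suppositions the argument rests on.

```prolog
% Toy sketch (hypothetical, NOT MARGO's implementation): build tree-like
% arguments by reasoning backwards from a conclusion. rule/3,
% candidate_decision/1, supposable/1 and argue/3 are illustrative names.
:- use_module(library(lists)).

% rule(Id, Head, Body): Head holds if every literal of Body holds.
rule(r1, buy(car), [cheap, reliable]).
rule(r2, cheap, [d(second_hand)]).
rule(r3, reliable, [checked]).

% Decisions the agent may take, and literals it may suppose.
candidate_decision(d(second_hand)).
supposable(checked).

% argue(+Conclusion, -Tree, -Assumptions): Tree is an argument for
% Conclusion; Assumptions lists the decisions and suppositions it uses.
argue(G, decided(G), [G]) :-
    candidate_decision(G).
argue(G, supposed(G), [G]) :-
    supposable(G).
argue(G, node(Id, G, Subtrees), Assumptions) :-
    rule(Id, G, Body),
    argue_all(Body, Subtrees, Assumptions).

% argue_all(+Goals, -Trees, -Assumptions): argue for each goal in turn
% and concatenate the assumptions of the sub-arguments.
argue_all([], [], []).
argue_all([G|Gs], [T|Ts], Assumptions) :-
    argue(G, T, A1),
    argue_all(Gs, Ts, A2),
    append(A1, A2, Assumptions).
```

For instance, the query ?- argue(buy(car), Tree, Assumptions). yields:

- Assumptions = [d(second_hand), checked]
- Tree = node(r1, buy(car), [node(r2, cheap, [decided(d(second_hand))]), node(r3, reliable, [supposed(checked)])])

The supposition checked is what makes the reasoning abductive: nothing establishes it, yet the argument may rely on it.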

The authors and the maintainers of this software are:

This work is supported by the Sixth Framework IST programme of the EC, under the 035200 ARGUGRID project.

All files are copyright (C) 2007-2008 Maxime Morge and Paolo Mancarella. All rights reserved, except for the following files:

This package contains the following software:

This package includes the following external libraries:

In order to install MARGO, you have to perform the following steps:

Additionally, if you want to use JMARGO, you will also have to perform the following steps:

- Modify bin/env.sh (alternatively bin\env.bat), in particular the variables JAVA_BIN, JAVA_DOC, PL_BIN and MARGO_DIR.
- Run bin/run_JMARGO.sh (alternatively bin\run_JMARGO.bat).

To use MARGO, write a domain file (e.g. example.pl) that begins with the following lines:

    :- compile('../src/margo.pl').
    :- multifile decision/1, incompatibility/2, sincompatibility/2,
        goalrule/3, decisionrule/3, epistemicrule/3,
        goalpriority/2, decisionpriority/2, epistemicpriority/2,
        assumable/1, confidence/2.

Then load it into a Prolog shell in order to load MARGO and the example. For instance, type sicstus in a terminal and, once Prolog is running, type [example]. Arguments are then computed with admissibleArgument(+CONCLUSION,?PREMISES,?ASSUMPTIONS). Here + marks an input argument, and ? marks an argument that may be either bound or unbound.

We present here the final products built upon MARGO.

eAuctionTool is a software application that helps decide whether an electronic auction is suitable for a procurement. This tool is inspired by the eAuction decision tool provided by the Office of Government Commerce (OGC).

To use this tool, you need:

How to set it up? Modify the variables in bin/env.sh (alternatively in bin\env.bat).

How to run it? Execute the script bin/run_eAuctionTool.sh (alternatively bin\run_eAuctionTool.bat).

How to compile it? Execute the script bin/built_eAuctionTool.sh (alternatively bin\built_eAuctionTool.bat).

How to generate the Java API documentation? Execute the script bin/document_eAuctionTool.sh (alternatively bin\document_eAuctionTool.bat).

You simply need to answer the questions whenever possible. Note that, unlike the OGC tool, this tool does not require an answer to every question. You will then see the different criteria (decision points, steps, conclusion) light up red, green, amber, or black.

You can save and load your case (see File > Save).

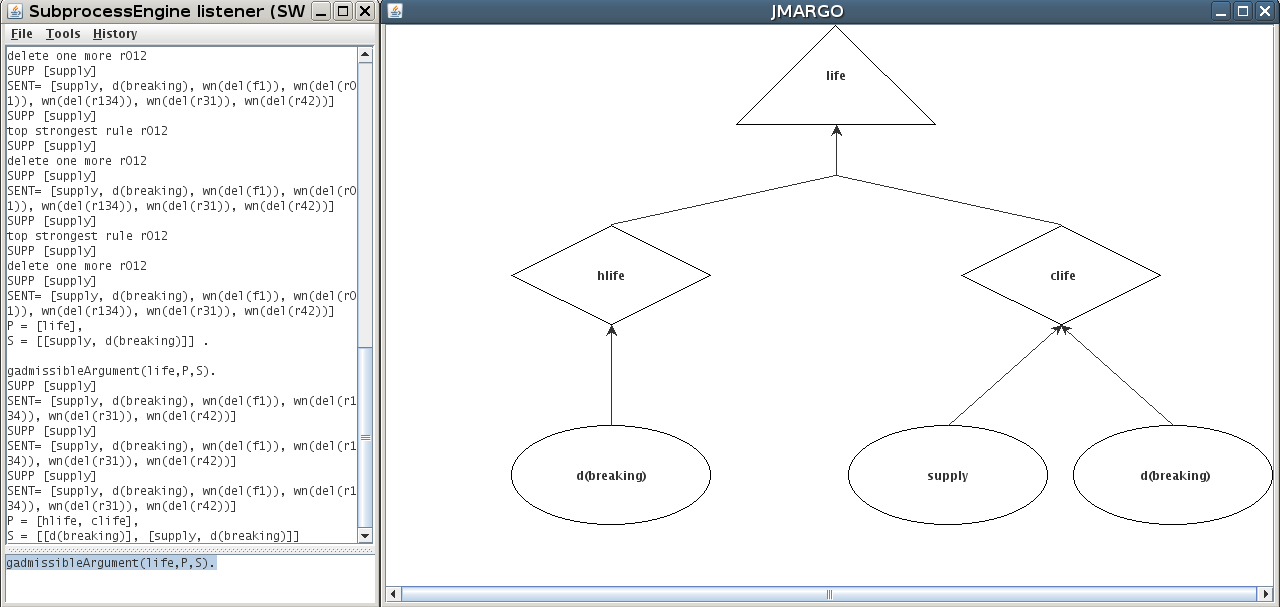

JMARGO is a graphical user interface for MARGO. This tool is built upon:

To use this tool, you need:

You simply need to:

query gadmissibleArgument(c,PREMISES,SUPPOSITIONS), where c is an atom. The graphical representation of the argument then appears.

We present here an example of an agent arguing over which action should be taken in a particular moral dilemma.

Hal is diabetic. Through no fault of his own, he has lost his supply of insulin and urgently needs to take some to stay alive. Hal is aware that Carla keeps some insulin in her house, but Hal does not have permission to enter Carla's house. The question is whether or not Hal is justified in breaking into Carla's house in order to get some insulin to save his life.

The main goal, which consists in "taking a moral decision", is addressed by a decision, i.e. a choice between breaking into Carla's house or not in order to get some insulin. The main goal (moral) is split into two sub-goals: an abstract sub-goal (life, i.e. respect for life) and a concrete sub-goal (prop, i.e. respect for property). The former is split into two concrete sub-goals (hlife, i.e. respect for Hal's life, and clife, i.e. respect for Carla's life). Indeed, Carla will die if she is diabetic and is left with no insulin.

The knowledge about the situation is expressed with propositions such as diabetic (Carla is diabetic) or supply (Carla has ample supplies of insulin).

The file which contains the domain description is represented in Figure 1. According to the goal theory, the achievement of both life and prop is required to reach moral (rule r012). Due to the priorities, this constraint can be relaxed (rule r01 requires only life). The achievement of life is therefore more important than the achievement of prop to reach moral.

According to the epistemic theory, the agent has conflicting beliefs about whether Carla is diabetic (f1 and f2). Due to the priorities, f1 is more plausible than f2. Since we do not know whether Hal taking Carla's insulin will leave her any insulin, supply is an assumption. According to the decision priorities, two options are more credible than breaking into Carla's house without information about her supplies (r43):

- leaving (r41);
- breaking into Carla's house if she is diabetic and has ample supplies of insulin (r42).
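The effect of the epistemic priority between f1 and f2 can be illustrated with a small stand-alone Prolog sketch. It copies the two epistemic facts and the priority from the example.

The sketch assumes a simple reading, which is ours and not necessarily MARGO's: a fact is believed when every fact concluding its strong negation (written sn/1) is strictly weaker. The helpers believed/1 and complement/2 are hypothetical names. Only rules with empty bodies are handled.

```prolog
% Toy sketch (hypothetical, NOT MARGO's code): resolve conflicting facts
% with an epistemic priority. believed/1 and complement/2 are illustrative.

% Epistemic facts and priority copied from the diabetic dilemma.
epistemicrule(f1, diabetic, []).
epistemicrule(f2, sn(diabetic), []).
epistemicpriority(f1, f2).

% complement(+Literal, -Complement): sn/1 marks strong negation.
complement(sn(L), L) :- !.
complement(L, sn(L)).

% believed(+Literal): some fact concludes Literal, and every fact
% concluding its complement is strictly weaker (assumed reading).
believed(L) :-
    epistemicrule(Id, L, []),
    complement(L, NL),
    \+ ( epistemicrule(Other, NL, []),
         \+ epistemicpriority(Id, Other) ).
```

With these clauses, ?- believed(diabetic). succeeds while ?- believed(sn(diabetic)). fails. The only fact for sn(diabetic) is f2, and f2 is weaker than f1.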

    % ==================================================== %
    % DIABETIC DILEMMA %
    % from %
    % Morge, M and Mancarella P. Modèle d'argumentation %
    % pour le raisonnement pratique. Proc. of the Journées %
    % Francophones Planification, Décision, Apprentissage %
    % pour la conduite de systèmes (JFPDA'07), p 123-134, %
    % Grenoble, France 2007. %
    % ==================================================== %

    :- compile('../src/margo.pl').

    :- multifile decision/1, incompatibility/2, sincompatibility/2,
        goalrule/3, decisionrule/3, epistemicrule/3,
        goalpriority/2, decisionpriority/2, epistemicpriority/2,
        assumable/1, confidence/2.

    %moral
    goalrule(r012,moral,[life, prop]).
    goalrule(r01,moral,[life]).

    %life
    goalrule(r134,life,[hlife, clife]).
    goalrule(r13,life,[hlife]).

    %prop
    decisionrule(r22, prop, [d(leaving)]).

    %hlife
    decisionrule(r31, hlife, [d(breaking)]).

    %clife
    decisionrule(r40, clife, [d(nothing)]).
    decisionrule(r41, clife, [d(leaving), diabetic]).
    decisionrule(r42, clife, [d(breaking), diabetic, supply]).

    %diabetic
    epistemicrule(f1,diabetic,[]).
    epistemicrule(f2,sn(diabetic),[]).

    %priority
    goalpriority(r012, r01).
    goalpriority(r134, r13).
    epistemicpriority(f1,f2).

    decision([d(breaking), d(leaving)]).
    assumable(supply).

The query admissibleArgument(moral,P,A) returns:

    P = [life]
    A = [d(breaking), supply]

P can be understood as the strongest combination of sub-goals that can be reached by an alternative. This sub-goal can be challenged.
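One way to read "the strongest combination of sub-goals that can be reached by an alternative" is sketched below as stand-alone Prolog. It is a toy reconstruction under stated assumptions, not MARGO's algorithm:

- the goal and decision rules of the example are merged into a hypothetical rule/3;
- the conflict between f1 and f2 is pre-resolved by taking diabetic as believed;
- each alternative is a single decision from decision([d(breaking), d(leaving)]).

For a given alternative, best/5 keeps a rule for a goal only if its body can be reached and no stronger rule (per the goal priorities) is also reachable. The names believed/1, supposable/1, stronger/2, alternative/1, reaches/3, best/5 and strongest/4 are introduced for this illustration.

```prolog
% Toy sketch (hypothetical, NOT MARGO's algorithm): for each alternative,
% keep the strongest reachable goal rule. All predicate names are ours.
:- use_module(library(lists)).

% Goal and decision rules of the diabetic dilemma, merged into rule/3.
rule(r012, moral, [life, prop]).
rule(r01,  moral, [life]).
rule(r134, life,  [hlife, clife]).
rule(r13,  life,  [hlife]).
rule(r22,  prop,  [d(leaving)]).
rule(r31,  hlife, [d(breaking)]).
rule(r40,  clife, [d(nothing)]).
rule(r41,  clife, [d(leaving), diabetic]).
rule(r42,  clife, [d(breaking), diabetic, supply]).

% Simplification of this sketch: f1 beats f2, so diabetic is believed.
believed(diabetic).
supposable(supply).

% Goal priorities: stronger(Stronger, Weaker).
stronger(r012, r01).
stronger(r134, r13).

% The alternatives, taken from decision([d(breaking), d(leaving)]).
alternative(A) :- member(A, [d(breaking), d(leaving)]).

% reaches(+Alt, +Literal, -Sups): Literal holds when Alt is chosen,
% supposing the literals in Sups. A decision holds only if it is Alt.
reaches(Alt, d(X), []) :- !, Alt == d(X).
reaches(_, L, []) :- believed(L).
reaches(_, L, [L]) :- supposable(L).
reaches(Alt, L, Sups) :- best(Alt, L, _, _, Sups).

% reaches_all(+Alt, +Literals, -Sups): every literal is reached.
reaches_all(_, [], []).
reaches_all(Alt, [L|Ls], Sups) :-
    reaches(Alt, L, S1),
    reaches_all(Alt, Ls, S2),
    append(S1, S2, Sups).

% best(+Alt, +Goal, -Id, -Body, -Sups): rule Id reaches Goal under Alt
% and no stronger rule for Goal is also reachable under Alt.
best(Alt, G, Id, Body, Sups) :-
    rule(Id, G, Body),
    reaches_all(Alt, Body, Sups),
    \+ ( stronger(Id2, Id),
         rule(Id2, G, Body2),
         reaches_all(Alt, Body2, _) ).

% strongest(?Goal, ?Alt, -Premises, -Sups): try each alternative.
strongest(G, Alt, Premises, Sups) :-
    alternative(Alt),
    best(Alt, G, _, Premises, Sups).
```

Here ?- strongest(moral, Alt, P, S). gives Alt = d(breaking), P = [life] and S = [supply] as its only answer:

- r012 fails, because prop needs d(leaving), so the weaker rule r01 is used;
- under d(leaving), life cannot be reached at all.

This agrees with the premises returned above, but the sketch is not meant to reproduce MARGO's output in general.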

The query admissibleArgument(life,P,A) returns:

    P = [hlife, clife]
    A = [d(breaking)]

If you run JMARGO, gadmissibleArgument(life,P,A) draws the corresponding argument.

If you find any rough areas or problems, please feel free to report them and help us correct them.

Bibliography

(Morge ArgMAS 2008) Maxime Morge. The Hedgehog and the Fox: An Argumentation-Based Decision Support System. In Argumentation in Multi-Agent Systems: Fourth International Workshop ArgMAS, Revised Selected and Invited Papers. Iyad Rahwan, Simon Parsons and Chris Reed (eds.). Lecture Notes in Artificial Intelligence, Volume 4946, Springer-Verlag, Berlin, Germany, 2008.